Harmonizing technology and tradition: Analysis of grade VI mathematics midterm exam questions

DOI: https://doi.org/10.31629/jg.v9i2.7464

Keywords: question quality, classical test theory, validity, reliability, distractor effectiveness

Abstract

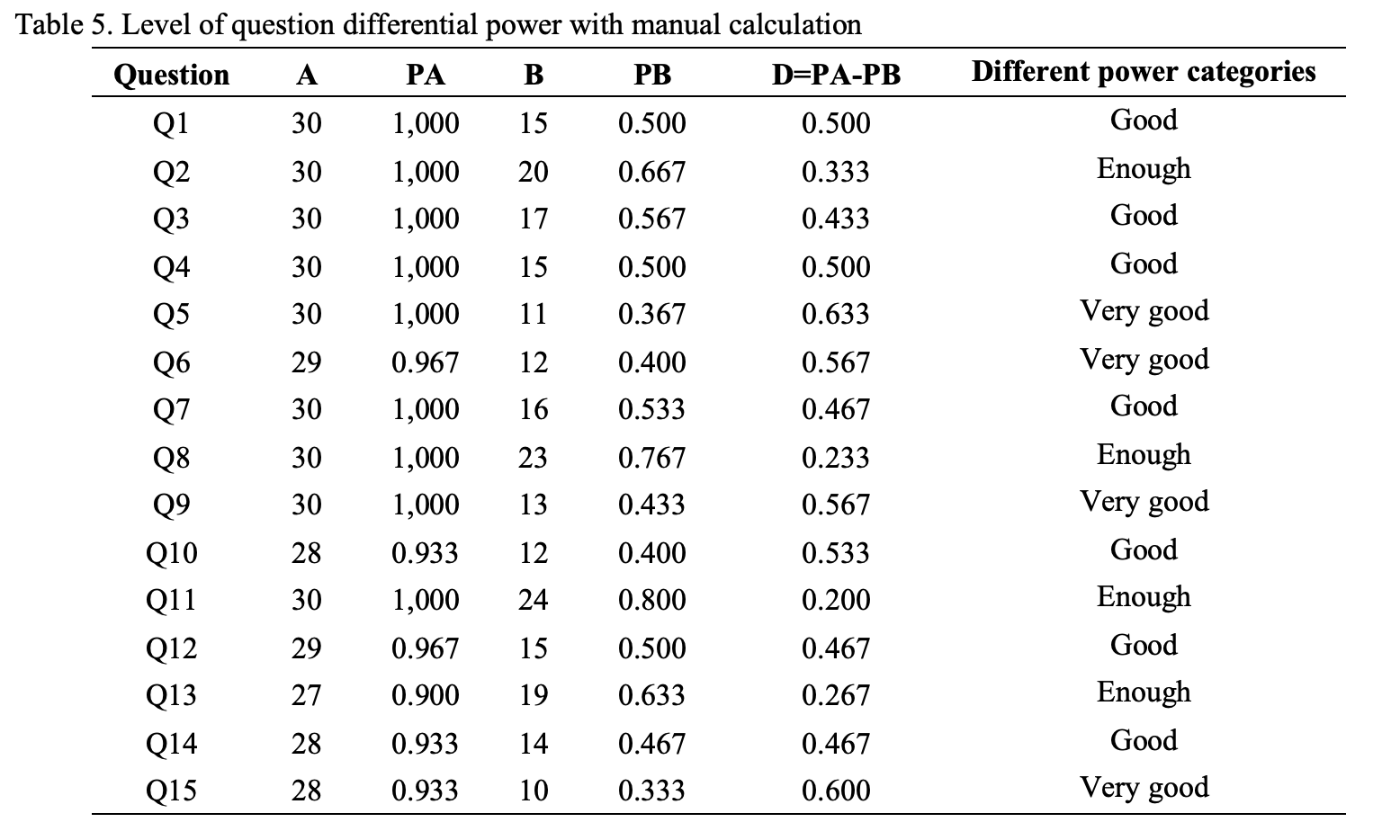

In education, evaluation instruments such as tests are important tools for measuring students' abilities objectively. However, poor item quality, such as items that are too easy or distractors that do not function, can reduce a test's validity and reliability and weaken its ability to distinguish between examinees of different abilities. This study analyzed the quality of the Grade VI Mathematics midterm exam (Ujian Tengah Semester, UTS) questions at Sekolah Prestasi Global. An analysis based on classical test theory (CTT), carried out with SPSS and jMetrik, allowed a detailed evaluation of item quality. The research data were the answers of 111 students to the 25 midterm exam questions; 15 objective items were analyzed further, consisting of 10 multiple-choice questions and five true-false questions. The results showed that most of the Grade VI Mathematics UTS items were relatively easy (80%, i.e., 12 of the 15 items, had a difficulty index above 0.70), so they were less effective at distinguishing examinees' abilities. Only three items fell into the ideal difficulty category (0.31–0.70), and most distractors were ineffective, with low or zero discrimination values. Although the overall reliability of the test was good (Cronbach's alpha = 0.763), the study concludes that the quality of the Grade VI Mathematics UTS at Sekolah Prestasi Global needs to be improved by revising the easy questions, improving the distractors, and balancing the distribution of difficulty levels, so as to produce a test instrument that is more valid, more reliable, and able to evaluate examinees' abilities accurately.
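The CTT indices named in the abstract can be illustrated with a short sketch. The Python code below is a minimal, hypothetical illustration, not the authors' SPSS/jMetrik workflow. It assumes dichotomously scored items (1 = correct, 0 = incorrect) stored as one row per student; computes the difficulty index p as the proportion of correct answers; classifies p with the abstract's bands (treating p below 0.31 as "difficult" is an assumed convention); estimates discrimination as a corrected item-rest point-biserial correlation (jMetrik's reported index may differ); computes Cronbach's alpha as α = k/(k−1)·(1 − Σσ²ⱼ/σ²ₓ); and flags distractors chosen by fewer than 5% of students (a common rule of thumb, assumed here). All function names and the sample data are hypothetical.

```python
from statistics import mean, pstdev, pvariance

# Hypothetical layout: scores[i][j] = 1 if student i answered item j correctly, else 0.


def difficulty(scores, j):
    """Difficulty index p: proportion of students answering item j correctly."""
    return mean(row[j] for row in scores)


def difficulty_category(p):
    """Bands from the abstract (>0.70 easy, 0.31-0.70 ideal); p < 0.31 as 'difficult' is assumed."""
    if p > 0.70:
        return "easy"
    if p >= 0.31:
        return "ideal"
    return "difficult"


def pearson(x, y):
    """Pearson correlation; returns 0.0 when either variable is constant."""
    sx, sy = pstdev(x), pstdev(y)
    if sx == 0 or sy == 0:
        return 0.0  # a constant item (everyone right or wrong) cannot discriminate
    mx, my = mean(x), mean(y)
    cov = mean((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / (sx * sy)


def discrimination(scores, j):
    """Corrected point-biserial: item j versus the rest score (total minus item j)."""
    item = [row[j] for row in scores]
    rest = [sum(row) - row[j] for row in scores]
    return pearson(item, rest)


def cronbach_alpha(scores):
    """alpha = k/(k-1) * (1 - sum of item variances / variance of total scores)."""
    k = len(scores[0])
    item_vars = [pvariance([row[j] for row in scores]) for j in range(k)]
    total_var = pvariance([sum(row) for row in scores])
    if k < 2 or total_var == 0:
        raise ValueError("alpha needs at least two items and non-constant total scores")
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def nonfunctioning_distractors(choices, key, options="ABCD", threshold=0.05):
    """Distractors chosen by fewer than `threshold` of students (assumed 5% rule of thumb)."""
    n = len(choices)
    return [o for o in options if o != key and choices.count(o) / n < threshold]


if __name__ == "__main__":
    # Tiny made-up data set (5 students x 3 items), not the study's data.
    scores = [
        [1, 1, 0],
        [1, 0, 0],
        [1, 1, 1],
        [0, 0, 0],
        [1, 1, 1],
    ]
    for j in range(3):
        p = difficulty(scores, j)
        print(f"item {j + 1}: p={p:.2f} ({difficulty_category(p)}), "
              f"r_it={discrimination(scores, j):.2f}")
    print(f"alpha={cronbach_alpha(scores):.3f}")
    print(nonfunctioning_distractors(["A", "A", "B", "A", "C"], key="A"))
```

Population variances are used for both the item and total scores; with sample variances the (n−1) factors cancel in the ratio, so alpha would be the same.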




License

Copyright (c) 2024 Jurnal Gantang

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.